How To Design Effective Language Tests: A Practical Guide For Educators

When language practitioners plan to assess students during or at the end of a teaching term, they have a wide range of options to choose from, such as a comprehensive examination, a short quiz, an essay assignment, a vocabulary/grammar test, or an oral presentation. They also have varying degrees of autonomy in planning assessment, ranging from following a prescribed plan (no autonomy) to choosing assessments that suit their preferences and teaching context (enhanced autonomy).

In any case, practitioners can draw on their language assessment literacy to inform, guide, or decide assessment, so that assessment is not conducted for the sake of assessment but as an effective practice grounded in current approaches. The basic questions teachers should ask themselves are:

- what and how much to assess

- when to assess

- how to assess

- what to do with the assessment outcome (e.g., test scores, GPA, or written feedback)

Decisions about all these aspects will result in an assessment plan, and to implement it, practitioners need to design tests or contribute to test design, depending upon the level of autonomy available to them. To begin with, we’ll discuss the following three important concepts: Measurement, Assessment, and Test.

Understanding the Difference: Measurement, Assessment, and Test

Measurement is “the assignment of a number representing a level of performance” in English language use related to reading, writing, listening, speaking, communicating, and other subskills. Assessment, by contrast, is the overall judgment of a student’s English language ability and academic standing. It may or may not involve testing, and it draws on practitioners’ informal clues, mental notes, and everyday impressions as well as on the interpretation of measurement data (e.g., test scores, attendance, and participation).

In this post, we focus on designing measurement through a test. A test is a planned, systematic, and structured activity, which can take numerous forms, designed to elicit a specific language ability, behaviour, or proficiency level. “A language test is essentially a device or psychometric tool for measuring a person’s knowledge of and/or skill in using a language.” One important reminder: despite extensive research and scholarship on testing in the field, there is no best test, only tests that are more or less useful and appropriate for specific situations!

Taking Initial Steps

The fundamental goal of an effective test is to provide a trustworthy measurement (i.e., one that is reliable and valid). This means that the inferences you draw from the test outcome have a higher degree of accuracy (though never complete accuracy). Setting this goal is essential for all testing activities, big or small, whether an oral presentation or a grammar test.

It is common practice to use several testing activities over a teaching term to reach a decision about student achievement in the course or about a final grade. As we discuss in our upcoming book chapter on assessment from Bloomsbury Press, the initial step is to consider the following five-dimensional design guide when deciding on any test task or activity, even a short writing test or an oral mini-presentation, to assess students’ performance or learning.

Utilizing the Design Guide for Tests

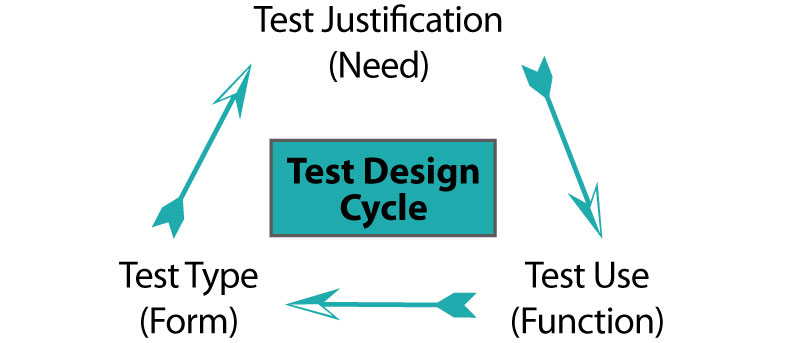

These initial steps involve establishing a solid foundation for a test through a cyclical process, in that each step informs the next:

- the justification of the need to test and a clear objective of a specific test (why),

- the argument for and benefit of choosing one test type over another (what), and

- the use of the test outcome to support interpretation and achieve the objective (how).

The test design process is cyclical because a test’s quality and impact improve incrementally when it is refined after its first implementation, which strengthens the foundation for effective testing.

The next stage is to employ a basic Test Design Framework to make sure that a test is reliable and valid for its specific purpose (see Ensuring the Trustworthiness of Tests below). In other words, use the right form for the right function to meet your assessment needs! The following framework, which is also from our upcoming book chapter on assessment, is a useful tool:

Objectives: Purpose of the test (e.g., proficiency, needs analysis, placement, or achievement)

Specifications: The most appropriate form of the test based on its objectives (e.g., item types, sections, length, difficulty level)

Frame: Reference point (e.g., norm-referenced, criterion-referenced, or alternative methods)

Audience: Intended test-takers of the specific test (e.g., student academic level, age, language background)

Medium and Level: Test delivery method (e.g., paper-based, online, hybrid) and targeted English proficiency level (e.g., beginner, low intermediate, advanced)

To document your decisions about a test along these dimensions, you can complete a table like the following. For example, if you decide to give your class an essay assignment on a given topic as one component of a testing plan that covers all tests in a course or grade, the test design for each test task should document at least the following basic information:

Test Design Framework (Example)

| Test Objectives | Test Specifications | Frame of Reference | Audience and Context | Test Medium and Level |
| --- | --- | --- | --- | --- |
| An English proficiency test with a goal to measure proficiency in general writing skills in Week 7 | A 450-word, three-paragraph narrative essay with an outline on a recent family camping trip; time limit: 2 hours | Criterion-referenced (measuring performance against a specific standard/learning outcome; not comparing it to the performance of others) | Grade 8 students at a public school in an EFL context in a rural region | On-site, pen-and-paper delivery; electronic/paper dictionaries allowed; Intermediate English proficiency level |
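To make the Frame of Reference column more concrete, the short Python sketch below contrasts a criterion-referenced reading of a score with a norm-referenced one. It is an illustration under stated assumptions, not part of the framework itself: the cut score of 7.0, the class scores, and the function names are hypothetical, and percentile rank is computed in one simple way (the share of classmates scoring strictly below), although other definitions exist.

```python
# Hypothetical illustration: the same score read through two frames of reference.

def criterion_referenced(score, cut_score):
    """Compare a score with a fixed standard (cut score); other students' scores play no role."""
    return "meets standard" if score >= cut_score else "below standard"


def percentile_rank(score, group_scores):
    """Norm-referenced view: percentage of the group scoring strictly below this score."""
    if not group_scores:
        raise ValueError("group_scores must not be empty")
    below = sum(1 for s in group_scores if s < score)
    return 100 * below / len(group_scores)


# Hypothetical class results on a 10-point essay test
class_scores = [5.0, 6.0, 7.5, 8.5, 9.0, 9.5]
student_score = 7.5
CUT_SCORE = 7.0  # hypothetical standard for the learning outcome

print(criterion_referenced(student_score, CUT_SCORE))      # meets standard
print(round(percentile_rank(student_score, class_scores)))  # 33
```

Here the student’s 7.5 meets the hypothetical standard yet sits at roughly the 33rd percentile of the class, which is why the frame of reference needs to be decided before scores are interpreted.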

Ensuring the Trustworthiness of Tests

Scores are finalized at the end of a test: for example, a student receives 7.5 out of 10. What exactly does this score mean for the student’s learning in the class? How accurately does it represent the student’s level of achievement?

If the test outcome is to be trustworthy, we need concrete answers to these fundamental questions rather than subjective or anecdotal explanations. Therefore, two features are essential to effective language testing and its ethical use: first, test reliability (i.e., the consistency and dependability of test scores) and, second, test validity (i.e., the appropriateness of inferences, uses, and decisions based on test scores).

Reliability is a crucial feature of any test, and together with validity it establishes a test’s trustworthiness. Reliability refers to the ability of a test to produce the same or very similar results each time it is administered to the same test-takers under similar conditions. To help ensure the reliability of a test, teachers need to

- use a clear scoring rubric with specific criteria,

- make instructions and items unambiguous,

- provide a range of items, and

- pilot and revise the test (e.g., through the Test Design Cycle).
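One common way to check the consistency described above is test-retest reliability: give the same test to the same students twice under similar conditions and correlate the two sets of scores. The Python sketch below assumes that approach and computes a Pearson correlation by hand; the function name and scores are hypothetical, and in practice teachers may also look at other indicators (e.g., agreement between two raters using the same rubric).

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between two equal-length lists of scores."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length score lists with at least two entries")
    n = len(first)
    mean_first = sum(first) / n
    mean_second = sum(second) / n
    # Covariance numerator and the two sums of squared deviations
    cov = sum((a - mean_first) * (b - mean_second) for a, b in zip(first, second))
    ss_first = sum((a - mean_first) ** 2 for a in first)
    ss_second = sum((b - mean_second) ** 2 for b in second)
    if ss_first == 0 or ss_second == 0:
        raise ValueError("scores must vary to compute a correlation")
    return cov / sqrt(ss_first * ss_second)


# Hypothetical scores (out of 10) for the same five students on two administrations
first_sitting = [7.5, 6.0, 8.5, 5.0, 9.0]
second_sitting = [7.0, 6.5, 8.0, 5.5, 9.0]

r = pearson_r(first_sitting, second_sitting)
print(round(r, 2))  # 0.97
```

A value close to 1 indicates that students kept roughly the same relative standing across the two sittings; a much lower value would suggest that scores depend heavily on when the test was taken, and that the test may need revision.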

With regard to validity, two commonly discussed types are criterion-related validity, which shows how well test scores relate to scores on other established measures of the same ability (e.g., another test), and content-related validity, which represents how adequately the test content covers the target skill area. In the field of testing, a general assumption is that a test which is not reliable is unlikely to be valid. Moreover, validity is not a fixed property of a test: “the key question is not whether a test is valid but, rather, for what purposes it is valid.” For example, a test designed to measure vocabulary knowledge may not be valid as a measure of general language ability.

Another related feature is authenticity, which refers to how closely a test task aligns with the conditions of real-life use of the language being tested. Using tasks that are as authentic as possible strengthens a test’s validity. For example, to assess writing skills in a Grade 10 English class, asking students to write an email for a specific situation or a review of their favorite movie offers higher validity as a measure of writing than a multiple-choice grammar and vocabulary quiz.

Test Design Action Plan

To put this approach to test design into practice, we suggest two simple, practical steps:

- Apply the Test Design Framework to all your existing tests and see what picture emerges in terms of trustworthiness.

- Ask two or three teaching colleagues to review your test design. This combination of self-reflection and peer review will help strengthen your language testing practices!

Designing effective assessments doesn’t have to be overwhelming. With a solid understanding of key concepts such as reliability, validity, and authenticity, along with a practical test design framework, you can create meaningful and trustworthy evaluation tools for your multilingual learners of English.